JungMok Lee

I am a second-year Master's student at AMI Lab, where I work on multimodal perception, advised by Tae-Hyun Oh. I am in the Department of Electrical Engineering at POSTECH. I'm interested in computer vision, multimodal large language models, machine learning, and visual interpretation.

Email / GitHub / Google Scholar / LinkedIn


Publications & Research


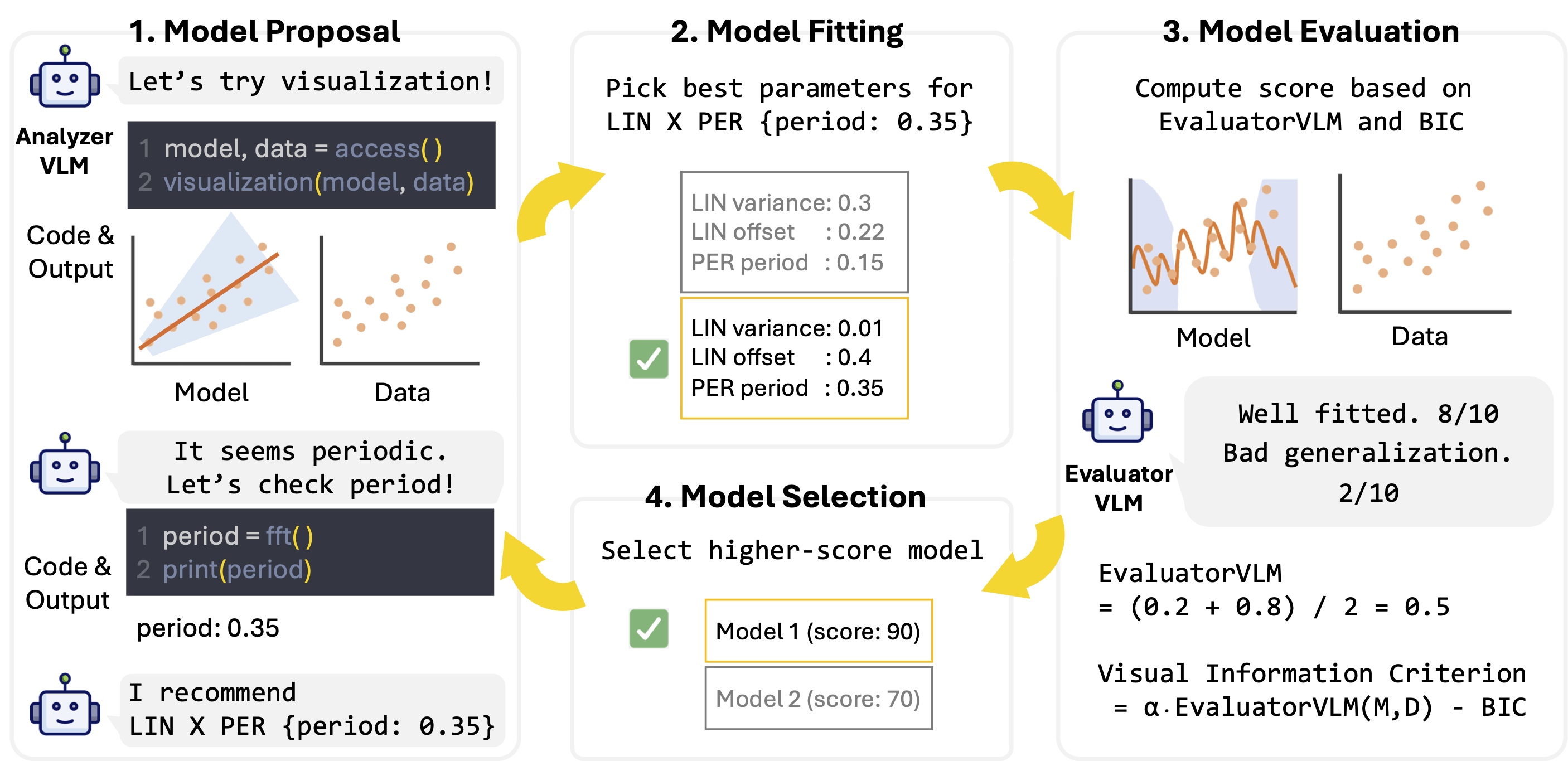

Automated Model Discovery via Multi-modal & Multi-step Pipeline

Lee Jung-Mok, Nam Hyeon-Woo, Moon Ye-Bin, Junhyun Nam, Tae-Hyun Oh

NeurIPS 2025

[project page] [arxiv] [code]

We present a multi-modal, multi-step pipeline for effective automated model discovery using multimodal LLM agents. We model the interpretation of time-series data using Gaussian Process kernel discovery.


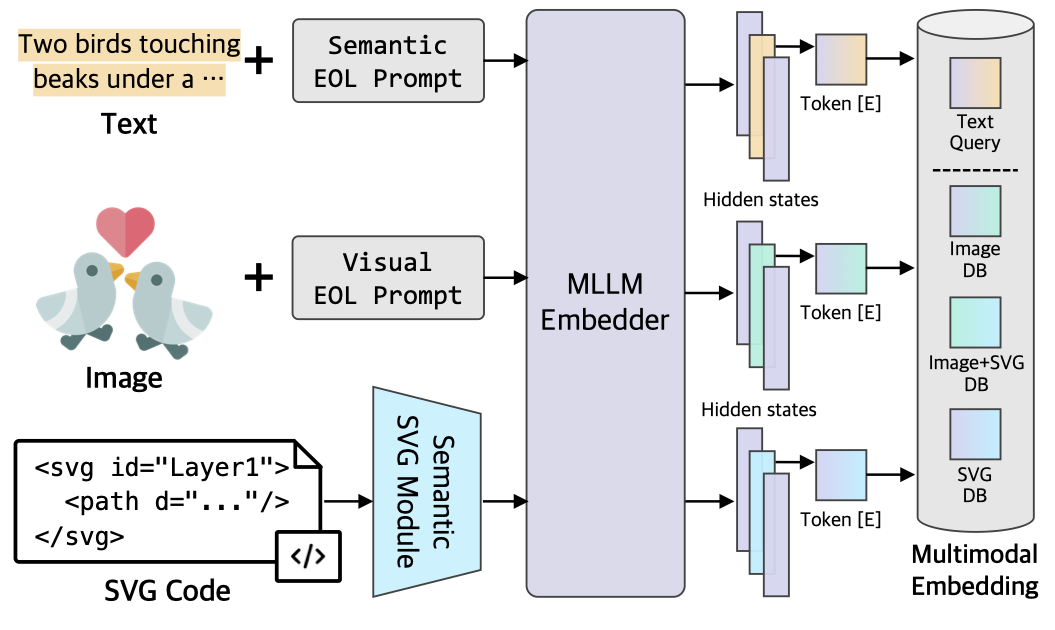

Training-free Multimodal Embedding for Structure-Aware Retrieval of Scalable Vector Graphics and Images

Kyeongseon Kim, Baek Seong-Eun, Lee Jung-Mok, Tae-Hyun Oh

WACV 2026

[project page] [arxiv] [code]

We propose the first training-free multimodal embedding method that uses a Multimodal Large Language Model (MLLM) to project text, images, and SVG code into an aligned space.


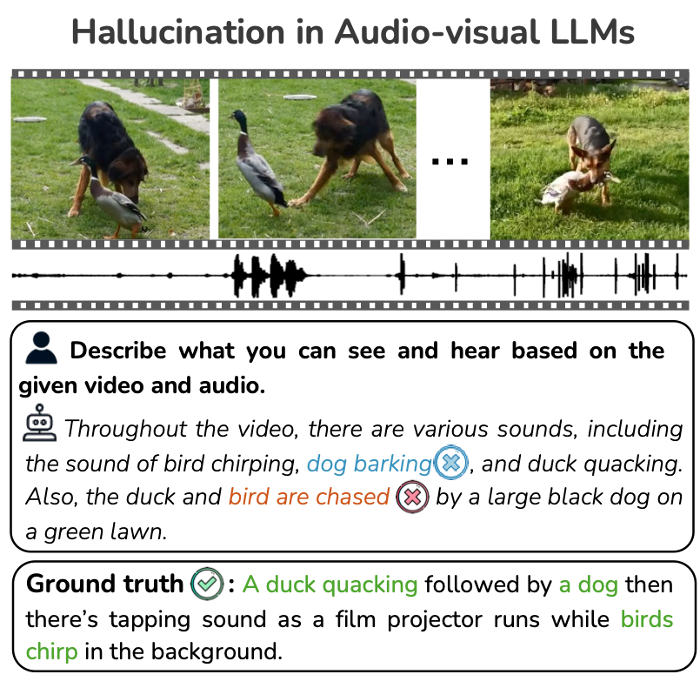

AVHBench: A Cross-Modal Hallucination Benchmark for Audio-Visual Large Language Models

Kim Sung-Bin*, Oh Hyun-Bin*, Lee Jung-Mok, Arda Senocak, Joon Son Chung, Tae-Hyun Oh

ICLR 2025

[project page] [arxiv] [code]

We introduce a comprehensive audio-visual hallucination benchmark specifically designed to evaluate the perception and comprehension capabilities of audio-visual LLMs.


Design and source code from Jon Barron's website